AngularJS and ASP.NET MVC are both widely used, so in this post we will combine the two and use AngularJS inside an ASP.NET MVC application. We will first add AngularJS to the project, then fetch data with the Entity Framework database-first approach, return it as JSON from an MVC controller, and finally consume it in AngularJS and pass it to the view through an AngularJS controller. In short, this post will touch upon:

· adding the AngularJS library to an ASP.NET MVC project;

· referencing AngularJS, with and without bundling and minification;

· fetching data using the Entity Framework database-first approach;

· returning JSON data from an ASP.NET controller;

· consuming JSON data in an AngularJS service;

· using the AngularJS service in an AngularJS controller to pass data to the view; and

· rendering data on an AngularJS view.

To start, let’s create an ASP.NET MVC application and right-click on the MVC project. From the context menu, click Manage NuGet Packages. Search for the AngularJS package and install it into the project.


After successfully adding the AngularJS library, you can find its files inside the Scripts folder.

Referencing the AngularJS library

You have two options for referencing the AngularJS library in the project: use MVC bundling and minification, or add AngularJS in the scripts section of an individual view. With bundling, AngularJS is available throughout the project; with the scripts section, it is available only on that particular view.

Let’s say you want to use AngularJS on a particular view (Index.cshtml) of the Home controller. First you need to reference the AngularJS library inside the scripts section as shown below:

@section scripts{
    <script src="~/Scripts/angular.js"></script>
}

Next, apply the ng-app directive and any other required directives on the HTML element as shown below:

<div ng-app="" class="row">
    <input type="text" ng-model="name" />
    {{name}}
</div>

When you run the application you will find AngularJS is up and running in the Index view. In this approach you will not be able to use AngularJS on the other views because the AngularJS library is only referenced in the Index view.

You may need AngularJS throughout the whole MVC application. In this case, it’s better to use MVC bundling and minification and reference the AngularJS library at the layout level. To do this, open BundleConfig.cs from the App_Start folder and add a bundle for the AngularJS library as shown below:

public static void RegisterBundles(BundleCollection bundles)
{
    // Bundle for the AngularJS library installed from NuGet
    bundles.Add(new ScriptBundle("~/bundles/angular").Include(
        "~/Scripts/angular.js"));

    // ... the other bundles from the project template stay unchanged ...
}

After adding the bundle to BundleConfig.cs, render the AngularJS bundle in _Layout.cshtml as listed below:

<head>
    <meta charset="utf-8" />
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>@ViewBag.Title - My ASP.NET Application</title>
    @Styles.Render("~/Content/css")
    @Scripts.Render("~/bundles/modernizr")
    @Scripts.Render("~/bundles/angular")
    @Scripts.Render("~/bundles/jquery")
    @Scripts.Render("~/bundles/bootstrap")
    @RenderSection("scripts", required: false)
</head>

After creating an AngularJS bundle and referring to it in _Layout.cshtml, you should be able to use AngularJS in the entire application.
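One thing to keep in mind with bundling: when optimizations are enabled, a script bundle is minified, and minifiers usually rename function parameters. AngularJS can infer which dependencies to inject from parameter names, so renamed parameters break that inference unless the names are also given as strings (the array style used for the factory later in this post). The plain-JavaScript sketch below illustrates the idea only; inferDependencyNames and resolveDependencyNames are hypothetical helpers written for this example, not AngularJS functions.

```javascript
// Simplified stand-in for implicit annotation: read the parameter names
// from the function's source text. This is NOT AngularJS's actual code.
function inferDependencyNames(fn) {
  var match = fn.toString().match(/^[^(]*\(([^)]*)\)/);
  if (!match) {
    return [];
  }
  return match[1]
    .split(',')
    .map(function (name) { return name.trim(); })
    .filter(function (name) { return name.length > 0; });
}

// Array annotation: the names are plain strings, so renaming the
// function's parameters does not affect them.
function resolveDependencyNames(annotated) {
  if (Array.isArray(annotated)) {
    return annotated.slice(0, annotated.length - 1);
  }
  return inferDependencyNames(annotated);
}

var original = function ($scope, StudentService) {};
var minified = function (a, b) {}; // what a minifier may produce
var annotated = ['$scope', 'StudentService', function (a, b) {}];

console.log(resolveDependencyNames(original));  // names read from the source
console.log(resolveDependencyNames(minified));  // names lost after renaming
console.log(resolveDependencyNames(annotated)); // names preserved as strings
```

If you plan to ship minified bundles, the array style is the safer choice for controllers and services alike.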

Fetching data from the database and using it in AngularJS

So far we have seen how to set up AngularJS for a particular view and for the entire application. Now let’s go ahead and create an end-to-end MVC application in which we will do the following tasks:

1. Fetch data from the database using the EF database-first approach

2. Return JSON from the MVC controller

3. Create an AngularJS service to fetch data using the $http service

4. Create an AngularJS controller

5. Create an AngularJS view on the MVC view to display data in the table

Connect to a database using the EF database-first approach

To connect to a database with the EF database-first approach, right-click the MVC project and select Add, then New Item. From the Data tab, select the ADO.NET Entity Data Model option.

From the next screen, select the “EF Designer from database” option.


On the next screen, click on the New Connection option. To create a new connection to the database:

1. Provide the database server name

2. Choose the database from the drop down. Here we are working with the “School” database, so we’ve selected that from the drop down.


On the next screen, leave the default name of the connection string and click Next.

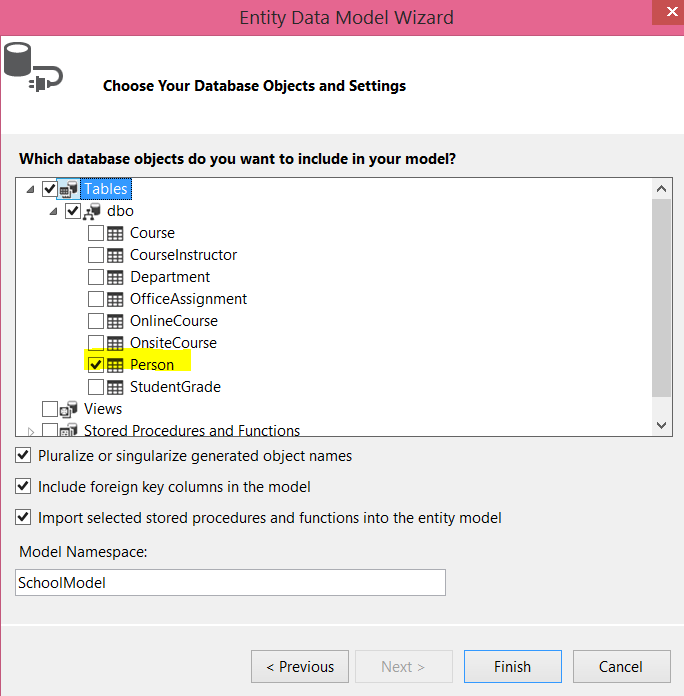

On the next screen, select the tables and other entities you want to keep as part of the model. To keep it simple I am using only the “Person” table in the model.

At this point we have created the connection to the database and added a model to the project. You should see that an .edmx file has been added to the project.

Return JSON from the MVC controller

To return the Person data as JSON, let us go ahead and add an action in the controller with the return type JsonResult. Keep in mind that you could just as easily write a Web API to return JSON data; however, the purpose of this post is to show you how to work with AngularJS, so I’ll stick with the simplest option, which is an action that returns JSON data:

public JsonResult GetPersons()
{
    SchoolEntities e = new SchoolEntities();

    // Load every row of the Person table through the EF model
    var result = e.People.ToList();

    // AllowGet is required so the action answers HTTP GET requests
    return Json(result, JsonRequestBehavior.AllowGet);
}
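To make the contract between the server and the client concrete, here is a minimal sketch of what the client side might receive and how it can be read. The property names (PersonID, FirstName, LastName) are the ones used by the view later in this post; the values are invented for illustration, and the real Person entity may expose more columns than shown here.

```javascript
// Hypothetical response body: property names come from the view in this
// post, the values are made up for the example.
var responseBody =
  '[{"PersonID":1,"FirstName":"Ada","LastName":"Lovelace"},' +
  '{"PersonID":2,"FirstName":"Alan","LastName":"Turing"}]';

// The JSON text becomes an array of plain JavaScript objects
var people = JSON.parse(responseBody);

// Each object can be read by property name, as the view's bindings do
var fullNames = people.map(function (p) {
  return p.FirstName + ' ' + p.LastName;
});

console.log(fullNames);
```

When the data is fetched with $http, this parsing step is done for you for JSON responses, so the controller works with the array directly.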

Create an AngularJS service to fetch data using $http

I assume you already have some familiarity with the following AngularJS terms, but here is a quick review of the key concepts:

Controller

A controller is a JavaScript constructor function which contains data and business logic. The controller and the view talk to each other using the $scope object. Each time a controller is used on the view, a new instance gets created. So if we use it 10 times, 10 instances of the controller will get created.

Service

A service is a JavaScript function for which an instance gets created only once per application life cycle. Anything shared across controllers should be part of a service. A service can be created in five different ways; the most popular are the service method and the factory method. AngularJS also provides many built-in services: for example, the $http service can be used to call an HTTP-based service from an Angular app. A service must be injected before it is used.

Modules

A module is a container for the other parts of an app, such as services and controllers. There should be at least one module per Angular app.

Note: These are the simplest definitions of these AngularJS concepts. You can find more in depth information on the web.
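The difference in lifetime between controllers and services can be sketched in plain JavaScript, without AngularJS. The tiny cache below is a hypothetical stand-in for the framework's behaviour, written only to show "one instance per use" versus "one instance per application"; getService and serviceCache are not AngularJS names.

```javascript
// Controller-like constructor: a new instance for every use
var controllerInstances = 0;
function StudentController() {
  controllerInstances += 1;
  this.id = controllerInstances;
}

// Service-like factory: should run only once per application
var factoryCalls = 0;
function studentServiceFactory() {
  factoryCalls += 1;
  return { name: 'StudentService' };
}

// Hypothetical cache that mimics "create once, reuse afterwards"
var serviceCache = {};
function getService(name, factory) {
  if (!(name in serviceCache)) {
    serviceCache[name] = factory();
  }
  return serviceCache[name];
}

var c1 = new StudentController();
var c2 = new StudentController();
var s1 = getService('StudentService', studentServiceFactory);
var s2 = getService('StudentService', studentServiceFactory);

console.log(controllerInstances); // two controller instances
console.log(factoryCalls);        // the factory ran once
console.log(s1 === s2);           // both lookups share one object
```

This is why shared state belongs in a service: every controller that asks for it gets the same object.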

Now let’s start creating the module! First, right-click on the project and add a JavaScript file. You can call it anything you’d like, but in this example, let’s call it StudentClient.js.

In the StudentClient.js we have created a module and a simple controller. Later we will modify the controller to fetch the data from the MVC action.

// Create the module; the empty array means it has no dependencies
var StudentApp = angular.module('StudentApp', []);

StudentApp.controller('StudentController', function ($scope) {
    $scope.message = "Infragistics";
});

To use the module and the controller on the view, first you need to add the reference of the StudentClient.js and then set the value of ng-app directive to the module name StudentApp. Here’s how you do that:

@section scripts{
    <script src="~/StudentClient.js"></script>
}
<div ng-app="StudentApp" class="row">
    <div ng-controller="StudentController">
        {{message}}
    </div>
</div>

At this point, if you run the application, you will find Infragistics rendered on the view. Let’s move on to creating the service. We will create the custom service using the factory method. In the service, we use the built-in $http service to call the action method of the MVC controller. Here we’re putting the service in the same StudentClient.js file.

StudentApp.factory('StudentService', ['$http', function ($http) {
    var StudentService = {};

    // Calls the GetPersons action on the Home controller
    StudentService.getStudents = function () {
        return $http.get('/Home/GetPersons');
    };

    return StudentService;
}]);
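Because the factory only depends on whatever is passed in as $http, its logic can be exercised outside the browser with a stand-in object. The sketch below copies the factory body into a standalone function; createStudentService and fakeHttp are hypothetical names introduced for this example, and the stub returns a marker object instead of a real promise.

```javascript
// Same body as the factory, written as a standalone function
function createStudentService($http) {
  var StudentService = {};
  StudentService.getStudents = function () {
    return $http.get('/Home/GetPersons');
  };
  return StudentService;
}

// Hypothetical stub that records the requested URLs instead of
// making a network call
var requestedUrls = [];
var fakeHttp = {
  get: function (url) {
    requestedUrls.push(url);
    return { stubbed: true };
  }
};

var service = createStudentService(fakeHttp);
var result = service.getStudents();

console.log(requestedUrls);
console.log(result.stubbed);
```

The same idea underlies unit testing of AngularJS services: the dependency is swapped for a controllable fake.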

Once the service is created, next you need to create the controller. In the controller we will use the custom service and assign returned data to the $scope object. Let’s see how to create the controller in the code below:

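// The array form lists the dependency names as strings, matching the
// style used for the factory
StudentApp.controller('StudentController', ['$scope', 'StudentService', function ($scope, StudentService) {
    getStudents();

    function getStudents() {
        // .then() is used because the older .success()/.error() helpers
        // were removed in AngularJS 1.6
        StudentService.getStudents()
            .then(function (response) {
                // response.data holds the JSON array returned by GetPersons
                $scope.students = response.data;
                console.log($scope.students);
            }, function (response) {
                $scope.status = 'Unable to load student data: ' + response.statusText;
                console.log($scope.status);
            });
    }
}]);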

Here we’ve created the controller, service, and module. Putting everything together, the StudentClient.js file should look like this:

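var StudentApp = angular.module('StudentApp', []);

// The array form lists the dependency names as strings, matching the
// style used for the factory
StudentApp.controller('StudentController', ['$scope', 'StudentService', function ($scope, StudentService) {
    getStudents();

    function getStudents() {
        // .then() is used because the older .success()/.error() helpers
        // were removed in AngularJS 1.6
        StudentService.getStudents()
            .then(function (response) {
                // response.data holds the JSON array returned by GetPersons
                $scope.students = response.data;
                console.log($scope.students);
            }, function (response) {
                $scope.status = 'Unable to load student data: ' + response.statusText;
                console.log($scope.status);
            });
    }
}]);

StudentApp.factory('StudentService', ['$http', function ($http) {
    var StudentService = {};

    // Calls the GetPersons action on the Home controller
    StudentService.getStudents = function () {
        return $http.get('/Home/GetPersons');
    };

    return StudentService;
}]);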

On the view, we can use the controller as shown below. Keep in mind that we are creating the AngularJS view inside Index.cshtml:

@section scripts{
    <script src="~/StudentClient.js"></script>
}
<div ng-app="StudentApp" class="container">
    <br />
    <br />
    <input type="text" placeholder="Search Student" ng-model="searchStudent" />
    <br />
    <div ng-controller="StudentController">
        <table class="table">
            <tr ng-repeat="r in students | filter : searchStudent">
                <td>{{r.PersonID}}</td>
                <td>{{r.FirstName}}</td>
                <td>{{r.LastName}}</td>
            </tr>
        </table>
    </div>
</div>

On the view, we are using the ng-app, ng-controller, ng-repeat, and ng-model directives, along with “filter” to filter the table by the text entered in the textbox. Essentially, these are the steps required to work with AngularJS in an ASP.NET MVC application.
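To give a feel for what the search box does to the table, here is a simplified plain-JavaScript approximation of filtering by a text value: a row is kept when any of its properties contains the search text, ignoring case. The function filterByText and the sample rows are hypothetical, and the built-in AngularJS filter handles more cases than this sketch.

```javascript
// Simplified approximation of filtering rows by a search string
function filterByText(items, searchText) {
  if (!searchText) {
    return items.slice(); // empty search keeps every row
  }
  var needle = String(searchText).toLowerCase();
  return items.filter(function (item) {
    return Object.keys(item).some(function (key) {
      return String(item[key]).toLowerCase().indexOf(needle) !== -1;
    });
  });
}

// Invented sample rows using the property names from the view
var students = [
  { PersonID: 1, FirstName: 'Ada', LastName: 'Lovelace' },
  { PersonID: 2, FirstName: 'Alan', LastName: 'Turing' },
  { PersonID: 3, FirstName: 'Grace', LastName: 'Hopper' }
];

var matches = filterByText(students, 'al');
console.log(matches);
```

Because the match runs over every property, typing a PersonID in the search box narrows the table just as typing a name does.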

Conclusion

In this post we focused on a few simple but important steps to work with AngularJS and ASP.NET MVC together. We also touched upon the basic definitions of some key AngularJS components, the EF database-first approach, and MVC. In further posts we will go into more depth on these topics, but I hope this post will help you in getting started with AngularJS in ASP.NET MVC. Thanks for reading!
