In this article, we will learn how to create an ASP.NET Web API using the Repository pattern and the Entity Framework Code First approach. Specifically, you'll learn how to:

1. Create a Core project that contains the entity and the repository interface;

2. Create an Infrastructure project that contains the database operations code, written with the Entity Framework Code First approach;

3. Create a Web API to perform CRUD operations on the entity;

4. Consume the Web API in a jQuery application and render the data in the Ignite UI Chart.

What is a Repository pattern?

Let us first understand why we need the Repository pattern. If you do not use a repository and access the data directly, the following problems may arise:

· Duplicate code

· Difficulty implementing data-related logic or policies such as caching

· Difficulty unit testing the business logic in isolation from the data access layer

· Tightly coupled business logic and database access logic

By implementing the Repository pattern, we can avoid these problems and gain the following advantages:

· Business logic can be unit tested without data access logic

· Database access code can be reused

· Database access code is centrally managed, so it is easy to implement database access policies such as caching

· Domain logic is easier to implement

· Domain (business) entities are strongly typed and can be annotated with validation rules
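To make the first advantage concrete, here is a minimal sketch in JavaScript (the language this article uses for its client code); it is not part of the project we build. The function `populationGrowth` and the stub object are hypothetical. The point is that the business rule only needs something offering a `findById` operation, so a unit test can hand it a stub instead of a database-backed repository.

```javascript
// Hypothetical business rule: growth of a city's population between 2001 and 2015.
// It only needs an object with a findById(id) method, mirroring
// ICityRepository.FindById, and knows nothing about Entity Framework.
function populationGrowth(cityRepository, id) {
  const city = cityRepository.findById(id);
  if (!city) {
    throw new Error(`City ${id} not found`);
  }
  return city.Population15 - city.Population01;
}

// In a unit test, a hand-written stub replaces the real repository,
// so no database is involved. The figures match the Delhi seed data
// used later in this article.
const stubRepository = {
  findById: (id) =>
    id === 1
      ? { Id: 1, Name: "Delhi", Population01: 20, Population15: 30 }
      : undefined,
};

console.log(populationGrowth(stubRepository, 1)); // 10
```

Swapping the stub for a real repository changes nothing in `populationGrowth`, which is exactly the decoupling the pattern is meant to provide.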

Now that we've listed the benefits, let's go ahead and implement the Repository pattern in an ASP.NET Web API.

Create the Core project

The Core project holds the entities and the repository interfaces. In this example we are going to work with a City entity, so let us create a City class as shown in the listing below:

```csharp
using System.ComponentModel.DataAnnotations;

namespace WebApiRepositoryPatternDemo.Core.Entities
{
    public class City
    {
        public int Id { get; set; }

        [Required]
        public string Name { get; set; }

        public string Country { get; set; }

        public int Population01 { get; set; }

        public int Population05 { get; set; }

        public int Population10 { get; set; }

        public int Population15 { get; set; }
    }
}
```

As you can see, we annotate the Name property with the Required attribute, which is part of System.ComponentModel.DataAnnotations. We can put constraints on the City entity using either of two approaches:

1. Data annotations from System.ComponentModel.DataAnnotations

2. The Entity Framework Fluent API

Both approaches have their own advantages. If you consider restrictions on the domain entities to be part of the domain, use data annotations in the Core project. However, if you consider the restrictions to be database concerns and you use Entity Framework as your database technology, go for the Fluent API.

Next, let's create the repository interface. Every operation you want to perform on the City entity should be part of the repository interface. The ICityRepository interface can be created as shown in the listing below:

```csharp
using System.Collections.Generic;
using WebApiRepositoryPatternDemo.Core.Entities;

namespace WebApiRepositoryPatternDemo.Core.Interfaces
{
    public interface ICityRepository
    {
        void Add(City b);

        void Edit(City b);

        void Remove(string Id);

        IEnumerable<City> GetCity();

        City FindById(int Id);
    }
}
```
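Because callers depend only on this interface, tests can substitute an in-memory implementation for the Entity Framework one. The following JavaScript sketch is hypothetical and not part of the solution: `InMemoryCityRepository` mirrors the five ICityRepository operations with camelCase names, uses numeric ids like City's int Id, and assigns new ids the way an identity column would. Unlike the C# signatures, `add` returns the new id and `remove` returns a success flag, purely for convenience in tests.

```javascript
// Hypothetical in-memory counterpart of ICityRepository, for tests only.
class InMemoryCityRepository {
  constructor(cities = []) {
    // Store copies keyed by Id so callers cannot mutate stored entities.
    this.cities = new Map(cities.map((c) => [c.Id, { ...c }]));
    this.nextId = Math.max(0, ...this.cities.keys()) + 1;
  }

  add(city) {
    // Mimic an identity column: the store, not the caller, assigns the Id.
    const stored = { ...city, Id: this.nextId++ };
    this.cities.set(stored.Id, stored);
    return stored.Id;
  }

  edit(city) {
    if (!this.cities.has(city.Id)) {
      throw new Error(`City ${city.Id} not found`);
    }
    this.cities.set(city.Id, { ...city });
  }

  remove(id) {
    // Map.delete returns false when there was nothing to remove.
    return this.cities.delete(id);
  }

  getCity() {
    return [...this.cities.values()].map((c) => ({ ...c }));
  }

  findById(id) {
    const city = this.cities.get(id);
    return city ? { ...city } : undefined;
  }
}
```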

Keep in mind that the Core project should never contain any code related to database operations. Hence the following references should not be part of the Core project:

· References to external libraries

· References to database libraries

· References to any ORM, such as LINQ to SQL or Entity Framework

After adding the entity class and the repository interface, the core project should look like the image below:

![]()

Create the Infrastructure project

The Infrastructure project contains the code that deals with things outside the application, for example:

· Database operations

· Consuming web services

· Accessing the file system

To perform the database operations we are going to use the Entity Framework Code First approach. We have already created the City entity on which the CRUD operations will be performed; to enable those operations, the following classes are required:

· A DataContext class

· A repository class implementing the repository interface created in the Core project

· A database initializer class

We then need to add the following references to the Infrastructure project:

· A reference to Entity Framework. To add it, right-click the project, click Manage NuGet Packages, and install Entity Framework

· A reference to the Core project

DataContext class

In the DataContext class:

1. Create a DbSet property, which maps the City entity to a table

2. In the constructor, pass the name of the connection string for the database in which the table will be created

3. Make the CityDataContext class inherit from DbContext

The CityDataContext class can be created as shown in the listing below:

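```csharp
using System.Data.Entity;
using WebApiRepositoryPatternDemo.Core.Entities;

namespace WebApiRepositoryPatternDemo.Infrastructure
{
    public class CityDataContext : DbContext
    {
        // "name=" tells Entity Framework to read this connection string from the config file
        public CityDataContext() : base("name=cityconnectionstring")
        {
        }

        // Maps the City entity to the Cities table
        public DbSet<City> Cities { get; set; }
    }
}
```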

Passing a connection string is optional; without one, Entity Framework chooses the database by convention. Here we set up the connection string in the App.config of the Infrastructure project, as shown in the listing below:

```xml
<connectionStrings>
  <add name="cityconnectionstring" connectionString="Data Source=(LocalDb)\v11.0;Initial Catalog=CityPolulation;Integrated Security=True;MultipleActiveResultSets=true" providerName="System.Data.SqlClient" />
</connectionStrings>
```

Database initializer class

We need a database initializer class to seed the database with initial values when it is created. To create it, define a class that inherits from DropCreateDatabaseIfModelChanges. As its name says, this initializer drops and re-creates the database whenever the model changes. Entity Framework provides other initializers as well, such as CreateDatabaseIfNotExists and DropCreateDatabaseAlways, and you can inherit from whichever one fits your needs. In the Seed method we set the initial values for the Cities table. With three cities, the database initializer class can be created as shown in the listing below:

```csharp
using System.Data.Entity;
using WebApiRepositoryPatternDemo.Core.Entities;

namespace WebApiRepositoryPatternDemo.Infrastructure
{
    public class CityDbInitalize : DropCreateDatabaseIfModelChanges<CityDataContext>
    {
        protected override void Seed(CityDataContext context)
        {
            context.Cities.Add(
                new City
                {
                    Id = 1,
                    Country = "India",
                    Name = "Delhi",
                    Population01 = 20,
                    Population05 = 22,
                    Population10 = 25,
                    Population15 = 30
                });

            context.Cities.Add(
                new City
                {
                    Id = 2,
                    Country = "India",
                    Name = "Gurgaon",
                    Population01 = 10,
                    Population05 = 18,
                    Population10 = 20,
                    Population15 = 22
                });

            context.Cities.Add(
                new City
                {
                    Id = 3,
                    Country = "India",
                    Name = "Bangalore",
                    Population01 = 8,
                    Population05 = 20,
                    Population10 = 25,
                    Population15 = 28
                });

            context.SaveChanges();
            base.Seed(context);
        }
    }
}
```

Repository class

So far we have created the DataContext and database initializer classes. We will use the DataContext class in the repository class. The CityRepository class implements the ICityRepository interface from the Core project and performs the CRUD operations using the DataContext class. It can be implemented as shown in the listing below:

```csharp
using System.Collections.Generic;
using System.Data.Entity;
using System.Linq;
using WebApiRepositoryPatternDemo.Core.Entities;
using WebApiRepositoryPatternDemo.Core.Interfaces;

namespace WebApiRepositoryPatternDemo.Infrastructure.Repository
{
    public class CityRepository : ICityRepository
    {
        private readonly CityDataContext context = new CityDataContext();

        public void Add(City b)
        {
            context.Cities.Add(b);
            context.SaveChanges();
        }

        public void Edit(City b)
        {
            // Mark the entity as modified, then persist it;
            // without SaveChanges the update is never written to the database.
            context.Entry(b).State = EntityState.Modified;
            context.SaveChanges();
        }

        public void Remove(string Id)
        {
            // City.Id is an int, so convert the key before calling Find;
            // passing the string directly makes Find throw.
            City b = context.Cities.Find(int.Parse(Id));
            if (b != null)
            {
                context.Cities.Remove(b);
                context.SaveChanges();
            }
        }

        public IEnumerable<City> GetCity()
        {
            return context.Cities;
        }

        public City FindById(int Id)
        {
            var c = (from r in context.Cities where r.Id == Id select r).FirstOrDefault();
            return c;
        }
    }
}
```

The implementation of the CityRepository class is straightforward: it uses ordinary LINQ to Entities queries and DbContext calls to perform the CRUD operations. After implementing all the classes, the Infrastructure project should look like the image below:

![]()

Create the WebAPI project

Now let's go ahead and create the Web API project. To get started we need to add the following references:

1. A reference to the Core project

2. A reference to the Infrastructure project

3. A reference to Entity Framework

After adding the references, copy the connection string cityconnectionstring (added in the previous step) from the App.config of the Infrastructure project to the Web.config of the Web API project. Next, open the Global.asax file and add the lines below to the Application_Start() method. They register the initializer, so the seed data is inserted when the database is first accessed:

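```csharp
// Inside Application_Start() in Global.asax.cs;
// CityDbInitalize lives in the WebApiRepositoryPatternDemo.Infrastructure namespace
CityDbInitalize db = new CityDbInitalize();
System.Data.Entity.Database.SetInitializer(db);
```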

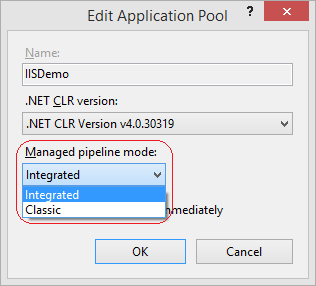

At this point, build the Web API project, then right-click the Controllers folder and add a new controller. Create it with scaffolding, choosing the Web API 2 Controller with actions, using Entity Framework option, as shown in the image below:

![]()

Next, to add the Controller, select the City class as the Model class and the CityDataContext class as the Data context class.

![]()

Once you click Add, a Web API controller named CitiesController is created in the Controllers folder. At this point, when you run the Web API, you should be able to GET the cities in the browser, as shown in the image below:

![]()

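We have now created a Web API using the Entity Framework Code First approach, with the data access code organized behind a repository. Note that the scaffolded CitiesController works with CityDataContext directly; to route its actions through the repository, replace the context field with an ICityRepository instance (such as CityRepository) and call the repository methods from each action. You can perform CRUD operations by sending POST, GET, PUT, and DELETE requests to the api/cities URL, as shown in the image below: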

![clip_image011 clip_image011]()
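As a rough client-side illustration of that mapping, the JavaScript sketch below builds the request for each CRUD operation. The helper `buildCityRequest` and its operation names are hypothetical. The routes assume the conventions of the scaffolded CitiesController (`api/Cities` for the collection, `api/Cities/{id}` for a single city), and the base URL must be whatever address your Web API project actually runs on.

```javascript
// Hypothetical helper: maps a CRUD operation on City to an HTTP request
// description ({ url, method, headers?, body? }) for the CitiesController routes.
function buildCityRequest(baseUrl, operation, { id, city } = {}) {
  const collection = `${baseUrl.replace(/\/+$/, "")}/api/Cities`;
  const jsonHeaders = { "Content-Type": "application/json" };
  switch (operation) {
    case "list":
      return { url: collection, method: "GET" };
    case "get":
      return { url: `${collection}/${id}`, method: "GET" };
    case "create":
      return {
        url: collection,
        method: "POST",
        headers: jsonHeaders,
        body: JSON.stringify(city),
      };
    case "update":
      // The id in the URL must match the Id in the body,
      // otherwise the scaffolded PUT action returns 400 Bad Request.
      return {
        url: `${collection}/${city.Id}`,
        method: "PUT",
        headers: jsonHeaders,
        body: JSON.stringify(city),
      };
    case "delete":
      return { url: `${collection}/${id}`, method: "DELETE" };
    default:
      throw new Error(`Unknown operation: ${operation}`);
  }
}
```

The result can be handed to the browser's `fetch`, for example `const { url, ...init } = buildCityRequest("http://localhost:56649", "list"); fetch(url, init).then((r) => r.json());`.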

jQuery client to consume the Web API

Now let's go ahead and display the population of the cities in an igDataChart. I added references to the Ignite UI JS and CSS files in the HTML, as shown in the listing below:

```html
<title>igGrid CRUD Demo</title>
<link href="Content/Infragistics/css/themes/infragistics/infragistics.theme.css" rel="stylesheet" />
<link href="Content/Infragistics/css/structure/infragistics.css" rel="stylesheet" />
<link href="Content/bootstrap.min.css" rel="stylesheet" />
<script src="Scripts/modernizr-2.7.2.js"></script>
<script src="Scripts/jquery-2.0.3.js"></script>
<script src="Scripts/jquery-ui-1.10.3.js"></script>
<script src="Scripts/Infragistics/js/infragistics.core.js"></script>
<script src="Scripts/Infragistics/js/infragistics.dv.js"></script>
<script src="Scripts/Infragistics/js/infragistics.lob.js"></script>
<script src="Scripts/demo.js"></script>
```

In the body element, I added a table as shown in the listing below:

```html
<table>
    <tr>
        <td id="columnChart" class="chartElement"></td>
        <td id="columnLegend" style="float: left"></td>
    </tr>
</table>
```

In jQuery's document ready function, we select the columnChart cell of the table and convert it to an igDataChart, as shown in the listing below:

```javascript
$(function () {
    $("#columnChart").igDataChart({
        width: "98%",
        height: "350px",
        // Web API endpoint that returns all cities on HTTP GET
        dataSource: "http://localhost:56649/api/Cities",
        legend: { element: "columnLegend" },
        title: "Cities Population",
        subtitle: "Population of Indian cities",
        axes: [{
            name: "xAxis",
            type: "categoryX",
            label: "Name",
            labelTopMargin: 5
        }, {
            name: "yAxis",
            type: "numericY",
            title: "in Millions"
        }],
        series: [{
            name: "series1",
            title: "2001",
            type: "column",
            isHighlightingEnabled: true,
            isTransitionInEnabled: true,
            xAxis: "xAxis",
            yAxis: "yAxis",
            valueMemberPath: "Population01"
        }, {
            name: "series2",
            title: "2005",
            type: "column",
            isHighlightingEnabled: true,
            isTransitionInEnabled: true,
            xAxis: "xAxis",
            yAxis: "yAxis",
            valueMemberPath: "Population05"
        }, {
            name: "series3",
            title: "2010",
            type: "column",
            isHighlightingEnabled: true,
            isTransitionInEnabled: true,
            xAxis: "xAxis",
            yAxis: "yAxis",
            valueMemberPath: "Population10"
        }, {
            name: "series4",
            title: "2015",
            type: "column",
            isHighlightingEnabled: true,
            isTransitionInEnabled: true,
            xAxis: "xAxis",
            yAxis: "yAxis",
            valueMemberPath: "Population15"
        }]
    });
});
```

Here we are setting the following properties to create the chart:

· dataSource is set to the api/Cities URL, so the chart fetches all cities with an HTTP GET request

· The legend, rendered in the columnLegend element

· The height and width of the chart

· The title and subtitle of the chart

· The x-axis (categoryX, labeled with the city Name) and the y-axis (numericY)

· The series, each created by setting the following properties:

o name

o title

o type

o valueMemberPath: it must be set to the name of a numeric property of the City entity
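The four series differ only in name, title, and valueMemberPath, so they can be generated rather than written out by hand. A minimal sketch, where `buildPopulationSeries` is a hypothetical helper; the resulting array could be assigned to the chart's `series` option in place of the literal array in the chart listing.

```javascript
// Hypothetical helper: builds one column series per [title, property] pair.
// Each property name must match a numeric property of the City entity,
// because valueMemberPath reads that property from every data item.
function buildPopulationSeries(pairs) {
  return pairs.map(([title, property], index) => ({
    name: `series${index + 1}`,
    title: title,
    type: "column",
    isHighlightingEnabled: true,
    isTransitionInEnabled: true,
    xAxis: "xAxis",
    yAxis: "yAxis",
    valueMemberPath: property,
  }));
}

// Produces the same four series as the literal array in the chart options.
const populationSeries = buildPopulationSeries([
  ["2001", "Population01"],
  ["2005", "Population05"],
  ["2010", "Population10"],
  ["2015", "Population15"],
]);
```

Adding a year then means adding one pair (and the matching property on City) instead of copying a whole series object.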

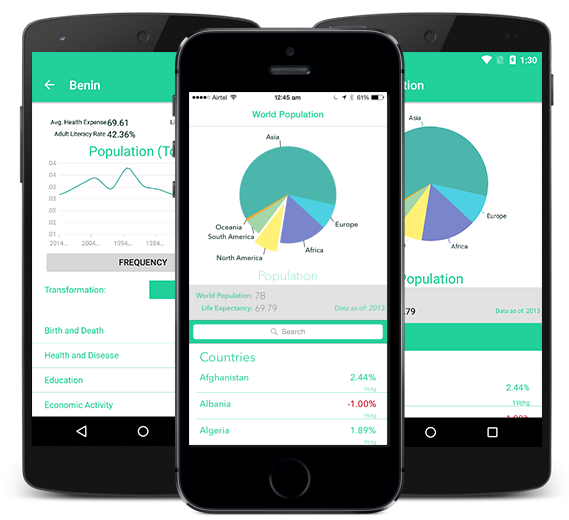

On running the application, you will find that the City data from the Web API is rendered in the chart, as shown in the image below:

![]()

Conclusion

There you have it! In this post we learned how to create an ASP.NET Web API using the Repository pattern and the Entity Framework Code First approach. I hope you find this post useful, and thanks for reading!
